Before you use any AI tool in your therapy or counselling practice, it’s important to make sure it’s safe, secure, and compliant with Canadian privacy laws.

This guide will help you understand how to evaluate AI tools generally, then focus on one of the most widely adopted use cases in mental health: AI note taking.

Why AI Tools Must Be Carefully Vetted in Mental Health

AI tools must be carefully vetted in mental health settings because they often interact with personal health information (PHI) and client communications.

Whether you’re considering an AI note taker, an appointment assistant, or a mood-tracking app, the stakes are higher in healthcare. Client trust, ethical standards, and legal compliance all depend on how responsibly you choose and use digital tools.

What to Look for in a Safe and Compliant AI Tool

Before integrating any AI tool into your mental health practice, whether for scheduling, admin support, client engagement, or clinical work, you need to evaluate its privacy practices, its compliance, and how well it fits your intended purpose.

Here are the broad categories to assess for any AI technology:

1. Privacy and Data Handling

A compliant AI tool should follow strict privacy protocols and clearly outline how data is collected, used, stored, and deleted.

- Is the tool transparent about how it handles information?

- Does it offer secure storage and data encryption?

- Can you delete or export data if requested?

2. Jurisdiction and Data Residency

Data storage location matters, especially in provinces like British Columbia or Nova Scotia where data residency laws apply.

- Is the data stored in Canada?

- If stored elsewhere, are there contracts or safeguards in place (e.g. standard contractual clauses)?

3. Vendor Transparency and Intent

Not all AI tools are built for healthcare. General-purpose tools may lack the protections required for clinical use.

- Is the tool designed for use in healthcare or professional services?

- Does the vendor provide clear privacy policies, compliance documentation, or support for regulated industries?

4. Scope of Use

Some tools may be acceptable for administrative tasks (e.g. appointment reminders), but not for anything involving personal health information (PHI).

- What kind of data is the AI tool handling?

- Does it touch or process any information considered health-related or confidential?

If an AI tool meets these basic expectations, you can then do a deeper compliance check, especially if it’s involved in clinical documentation or sensitive client communication.

Other Types of AI Tools Mental Health Professionals May Consider

Some AI tools can support clinical efficiency and practice operations if used responsibly and within ethical boundaries.

Here are examples of tools that may be appropriate for your practice, if they meet privacy and compliance requirements:

- Practice Management Platforms with AI Features: Some platforms include smart scheduling, billing, and documentation automation.

- AI for Clinical Decision Support: AI tools that track progress or assess patterns in PHQ-9/GAD-7 scores may help identify client needs early but should never replace clinical judgment.

- AI Chatbots or Intake Assistants: Some therapists use chatbots for website inquiries or routine scheduling. These should never be used to provide clinical advice unless they are specifically designed for healthcare use and meet its compliance requirements.

- AI for Admin or Continuing Professional Development (CPD): You can safely use general AI tools for personal research, content summarization, or psychoeducation development, but never input identifiable client data.

Remember, even for low-risk tools, always vet vendors for privacy standards and document your rationale for using AI in clinical or operational workflows.

Why AI Note Taking Tools Deserve Extra Attention

AI note taking tools require deeper scrutiny because they often handle full transcripts or summaries of therapy sessions.

Unlike scheduling software or admin bots, AI note takers process highly sensitive content that may include names, diagnoses, trauma disclosures, or clinical impressions. As such, they are subject to strict privacy standards and ethical considerations.

What Makes an AI Note Taking Tool Safe and Compliant?

A safe and compliant AI note taking tool protects client information, gives you full control over notes, and meets Canadian legal and regulatory standards.

Here’s how to evaluate an AI note taker before bringing it into your practice:

1. Health Data Compliance (PHIPA/PIPEDA)

A safe AI note taking tool must be built to comply with Canadian healthcare privacy legislation. This includes:

- PHIPA (for Ontario-based clinicians) or PIPEDA (for federal and other provincial contexts)

- Documentation that shows how the tool protects personal health information (PHI)

- Vendor willingness to sign a data protection or confidentiality agreement

2. Data Storage and Residency

The tool must offer secure data storage and adhere to any provincial residency requirements.

- Data is encrypted both in transit and at rest

- Data is stored in Canada, or in jurisdictions with equivalent privacy protections

- Option to store data locally or choose Canadian data centers, where possible

- Session audio or transcripts are not stored indefinitely unless explicitly required by the clinician

3. Clinician Control Over Notes

You must be able to fully control the generation, review, editing, and storage of your notes.

- You can review and approve AI-generated notes before saving

- You can edit content to reflect clinical accuracy

- You can delete or export session data at any time

- The tool does not lock you into proprietary formats

4. Client Transparency and Consent Support

The tool should make it easy for you to maintain client trust and meet informed consent obligations.

- The vendor provides guidance or templates for disclosing AI use to clients

- The tool allows you to disable AI features per client request

- Documentation includes privacy-forward explanations you can share with clients

5. Built for Healthcare Use

The most trustworthy tools are designed for and marketed to healthcare professionals.

- The interface and workflows reflect therapy-specific needs (e.g. SOAP note templates)

- Features include session tagging, clinical vocabulary support, and documentation formatting

- The vendor has experience serving regulated professionals or clinics

6. Explanation of How the AI Works

You should be able to explain how the AI generates its notes, even at a basic level, to a client or supervisor.

- Does the tool disclose whether it uses machine learning, templates, or human review?

- Does it process audio in real time, or does it store the audio and process it later?

- Are there any automated decisions made without your oversight?

AI Tool Resources for Your Practice

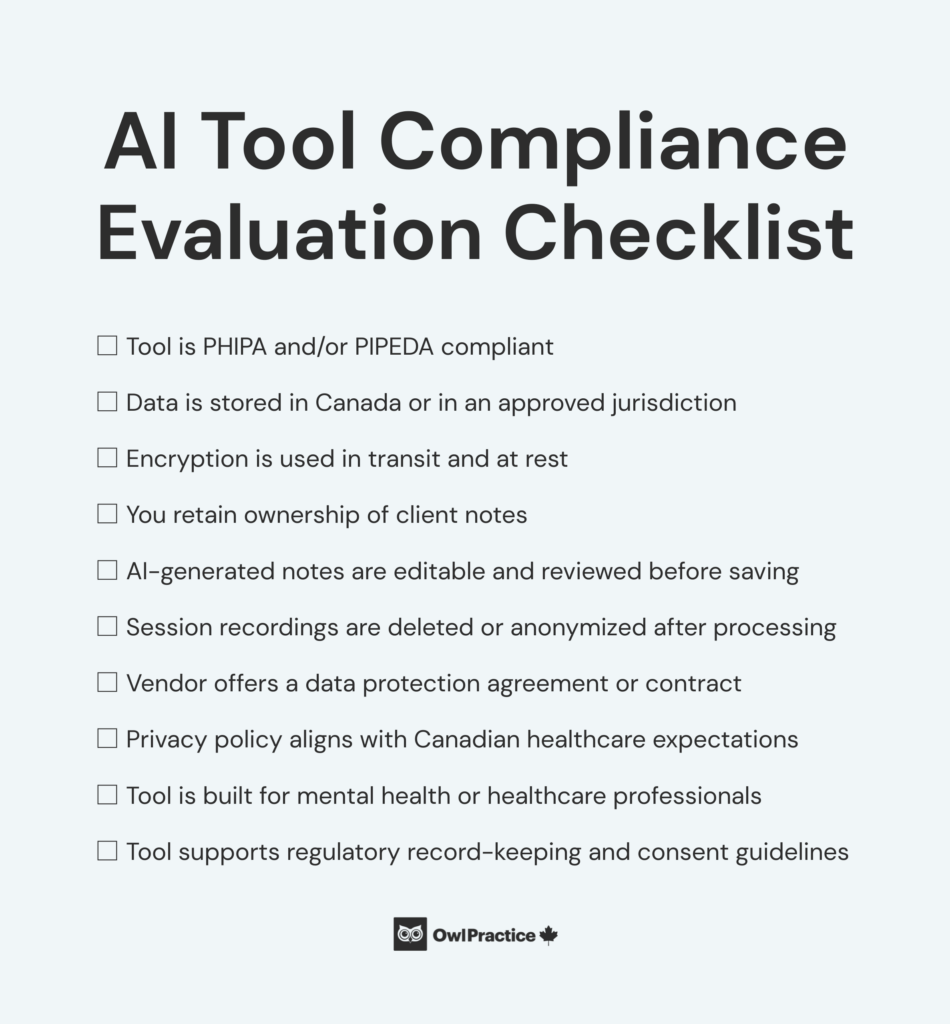

Use this checklist to ensure that an AI note taking tool is safe and compliant for your Canadian mental health practice:

Verbal Disclosure Script

“Just so you know, I use a secure AI-based tool to help generate clinical notes after our sessions. It meets Canadian privacy standards, and I always review the notes myself. Let me know if you have any concerns or prefer that I don’t use it for your sessions.”

Email Template for Clients

Subject: A Quick Note About How I Take Notes

Hi [Client Name],

I wanted to let you know that I’m using a secure AI-based tool to help with writing my session notes. It’s designed for healthcare professionals and complies with Canadian privacy laws. This helps me stay focused during our sessions while still maintaining accurate documentation.

If you have any questions or would prefer that I do not use this tool during our work together, please let me know.

Best,

[Your Name]

Best Practices for Using AI Note Taking Tools in Therapy

To use AI note taking tools ethically and safely, you should combine compliance with professional discretion.

- Always review AI-generated notes before finalizing or storing them

- Inform clients that you’re using an AI tool and explain how their data is handled

- Avoid using AI tools in high-risk or legally sensitive sessions

- Choose tools that minimize long-term storage of sensitive data

- Keep up with vendor updates and evolving privacy regulations

Final Thoughts

AI tools can offer real benefits to mental health professionals, from saving time on documentation to improving administrative efficiency. But in a profession built on trust, privacy, and ethical care, not every tool is a good fit.

When choosing an AI tool, especially one that touches clinical content or client information, your priority must be safety, security, and compliance. This goes beyond just reading marketing claims. It means asking the right questions, verifying documentation, and ensuring the tool aligns with both your legal responsibilities and professional obligations.

Remember:

- You are the health information custodian, even when using third-party tools

- Privacy is not optional; it is a legal and ethical obligation

- Clients have a right to transparency, especially when their sensitive information is involved

- Compliance is not just about where data is stored; how it is managed, protected, and disclosed matters as well

If you’re unsure about a tool, start by reviewing your college’s guidelines, asking the vendor direct questions, and consulting with privacy experts or legal professionals as needed.

By choosing tools that are purpose-built for healthcare and being intentional about how you integrate them into your practice, you can take advantage of AI’s benefits while keeping your clients safe and your practice secure.

Frequently Asked Questions About AI Tools

Can I use AI note taking tools in Canadian private practice?

Yes, if the tool complies with privacy laws and college regulations. You must ensure the vendor meets PHIPA or PIPEDA standards and supports clinical record keeping practices.

Does my data need to be stored in Canada?

In some provinces, yes. British Columbia and Nova Scotia require data residency. In other provinces, data may be stored outside Canada if contractual safeguards are in place. Owl Practice stores all its client data in Canada so you can rest assured that it meets any provincial requirements.

Do I need to get client consent?

Yes. Clients should be informed if you are using an AI tool to document sessions, and they must be given the opportunity to ask questions or opt out.

How do I know if a tool is compliant?

Look for explicit claims of PHIPA or PIPEDA compliance and ask the vendor to provide supporting documentation. Ensure the tool is specifically designed for clinical use in regulated healthcare settings. Solutions like Owl Practice’s AI note taker are built with Canadian privacy laws in mind and are PHIPA-compliant, making them a trusted option for mental health professionals in Canada.

If the vendor does not clearly outline their compliance measures or cannot provide documentation, it’s safest to avoid using the tool in your practice.

Coming Soon: Owl Practice will be launching an AI Note Taker tool!

This AI note taking tool meets all the guidelines discussed in this article and is an optional add-on for your practice.

Learn more about Owl Practice’s upcoming feature, Smart Notes, here!
